SoundFit: Soundtrack Fitting for Age

- Authors: Putra Zakaria Muzaki

Soundtrack Fitting for Age (SoundFit)

SoundFit is a mobile application that uses machine learning to estimate a user's age group from a facial image and recommends music playlists suited to that group. Built with Flutter, Firebase, and a Python model served through a Flask API, SoundFit aims to provide a personalized and interactive music experience.

NOTE

This project was developed as a Final Project (PBL) at Politeknik Negeri Malang, focusing on the implementation of Canny Edge Detection and Neural Networks.

Motivation

In the digital era, music streaming is ubiquitous, but few apps utilize age as a primary factor for recommendations. Research indicates that musical taste is significantly influenced by age; teenagers often prefer energetic genres like pop/rock, while adults may prefer calmer genres like jazz or classical.

SoundFit addresses this gap by using facial analysis to classify a user's age group and curating playlists that reflect generational differences in musical preference.

How It Works

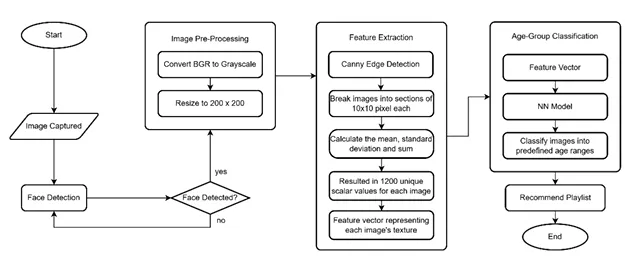

The system follows a structured pipeline to process user data:

- Image Pre-processing: The captured image is converted to grayscale and resized to 200x200 pixels.

- Face Detection: The system validates if a face is present in the image.

- Feature Extraction: Canny Edge Detection is applied to the image to extract edge features such as wrinkle lines, which are strong indicators of age.

- Classification: A Neural Network (NN) model processes the feature vectors to classify the user into a specific age group.

- Recommendation: A playlist is generated based on the predicted age group.
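The pre-processing and feature-extraction steps can be sketched in plain Python. This is a minimal illustration under stated assumptions, not the project's actual code: the function names, the nearest-neighbour resize, the 4x4 edge-density grid, and the threshold value are all hypothetical. The edge step uses a thresholded Sobel gradient as a simplified stand-in for Canny, which additionally applies Gaussian smoothing, non-maximum suppression, and hysteresis thresholding. Face detection is omitted.

```python
def to_grayscale(rgb):
    """Convert rows of (r, g, b) tuples to luminance using BT.601 weights."""
    return [[0.299 * r + 0.587 * g + 0.114 * b for (r, g, b) in row] for row in rgb]


def resize_nearest(img, size=200):
    """Nearest-neighbour resize to size x size (the article uses 200x200)."""
    h, w = len(img), len(img[0])
    return [[img[y * h // size][x * w // size] for x in range(size)]
            for y in range(size)]


def gradient_edges(img, threshold=100.0):
    """Binary edge map from Sobel gradient magnitude; border pixels stay 0.

    Simplified stand-in for Canny; the threshold is an assumed value.
    """
    h, w = len(img), len(img[0])
    edges = [[0] * w for _ in range(h)]
    for y in range(1, h - 1):
        for x in range(1, w - 1):
            gx = (img[y - 1][x + 1] + 2 * img[y][x + 1] + img[y + 1][x + 1]
                  - img[y - 1][x - 1] - 2 * img[y][x - 1] - img[y + 1][x - 1])
            gy = (img[y + 1][x - 1] + 2 * img[y + 1][x] + img[y + 1][x + 1]
                  - img[y - 1][x - 1] - 2 * img[y - 1][x] - img[y - 1][x + 1])
            if (gx * gx + gy * gy) ** 0.5 >= threshold:
                edges[y][x] = 1
    return edges


def edge_density_features(edges, grid=4):
    """Fraction of edge pixels in each cell of a grid x grid partition."""
    h, w = len(edges), len(edges[0])
    ch, cw = h // grid, w // grid
    feats = []
    for gy in range(grid):
        for gx in range(grid):
            total = sum(edges[y][x]
                        for y in range(gy * ch, (gy + 1) * ch)
                        for x in range(gx * cw, (gx + 1) * cw))
            feats.append(total / (ch * cw))
    return feats


# Synthetic 100x100 test image: left half black, right half white.
rgb = [[(0, 0, 0) if x < 50 else (255, 255, 255) for x in range(100)]
       for _ in range(100)]
gray = resize_nearest(to_grayscale(rgb), size=200)
edges = gradient_edges(gray)
features = edge_density_features(edges)  # 16 values fed to the classifier
```

The resulting fixed-length feature vector is the kind of input a small neural network can classify. A production pipeline would more likely call an image library's Canny implementation than hand-rolled loops.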

Figure: The system architecture from image capture to playlist recommendation.

Key Features

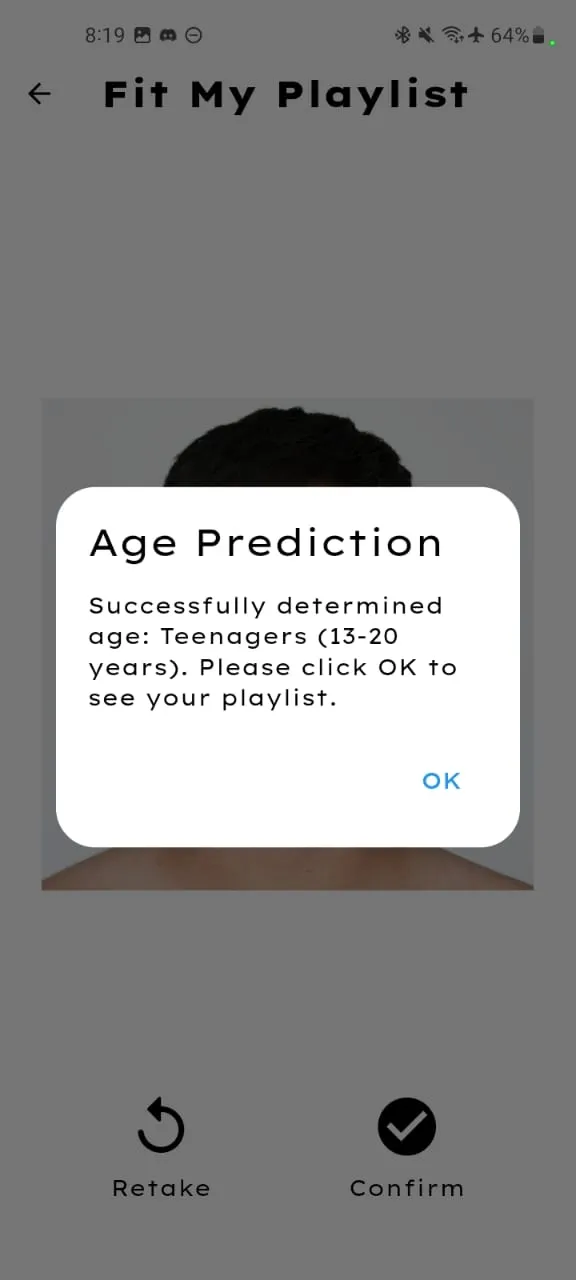

1. Age Detection

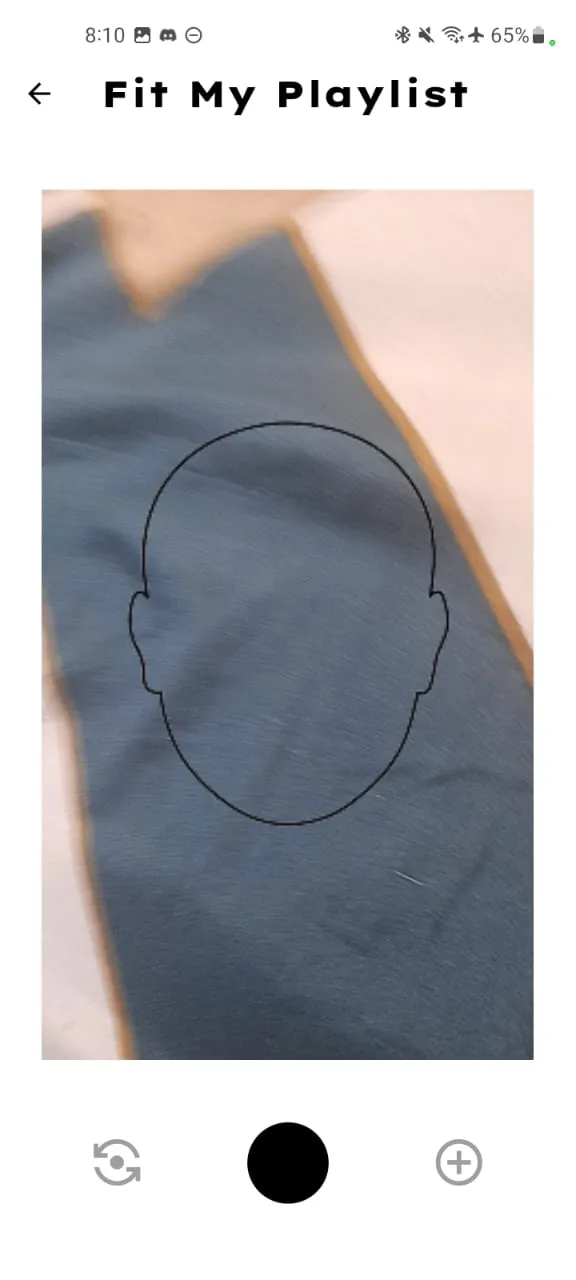

The app analyzes the user's face via the camera to classify them into age groups (e.g., Teenagers, Adults, Elderly).

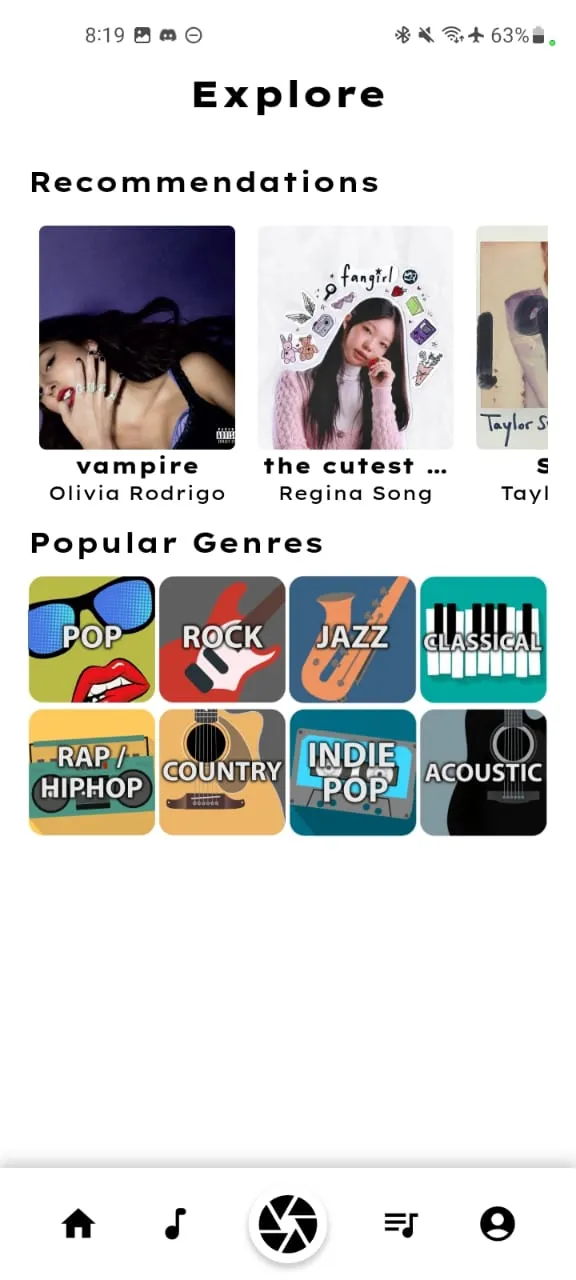

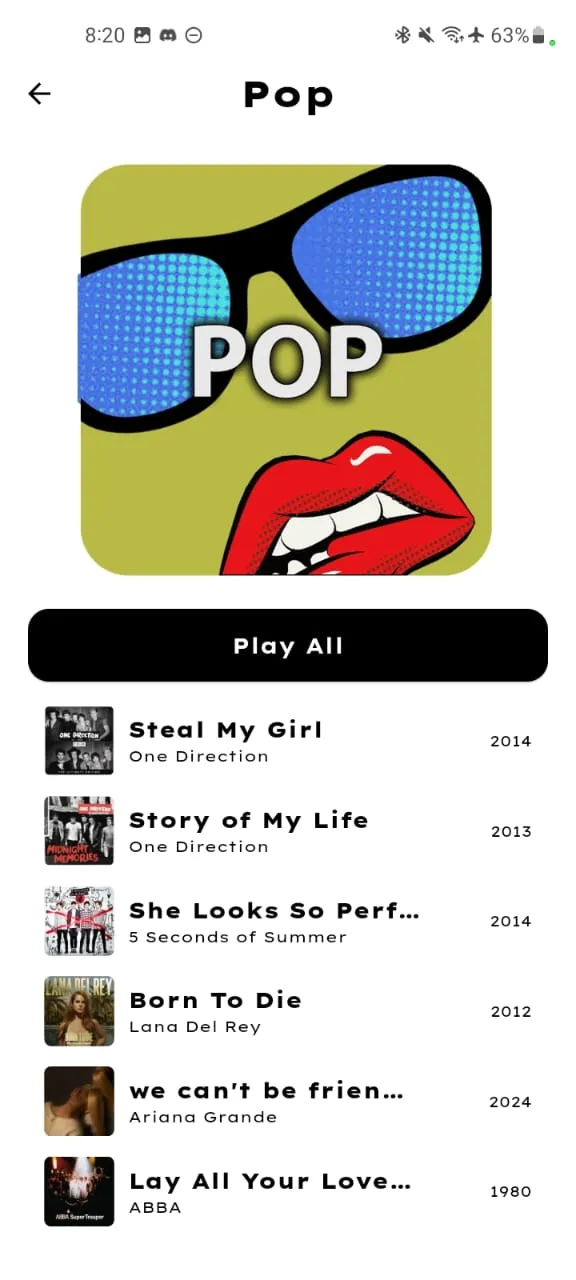

2. Curated Playlists

Based on the analysis, SoundFit provides music lists relevant to the user's age demographics and mood.
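One simple way to connect a predicted age group to a playlist is a lookup table of genre seeds. The sketch below is hypothetical: the names `PLAYLIST_SEEDS`, `DEFAULT_SEEDS`, and `seeds_for` are illustrative, only the teenager and adult genre hints come from the Motivation section, and the fallback for other groups is a placeholder assumption.

```python
# Genre hints for teenagers and adults follow the Motivation section;
# everything else here is an illustrative assumption.
PLAYLIST_SEEDS = {
    "teenager": ["pop", "rock"],
    "adult": ["jazz", "classical"],
}

# Placeholder used when no mapping is defined for a group (assumption).
DEFAULT_SEEDS = ["pop", "jazz"]


def seeds_for(age_group):
    """Return genre seeds for a predicted age group, ignoring case and spaces."""
    return PLAYLIST_SEEDS.get(age_group.strip().lower(), DEFAULT_SEEDS)


teen_seeds = seeds_for("Teenager")
unknown_seeds = seeds_for("elderly")  # falls back to DEFAULT_SEEDS
```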

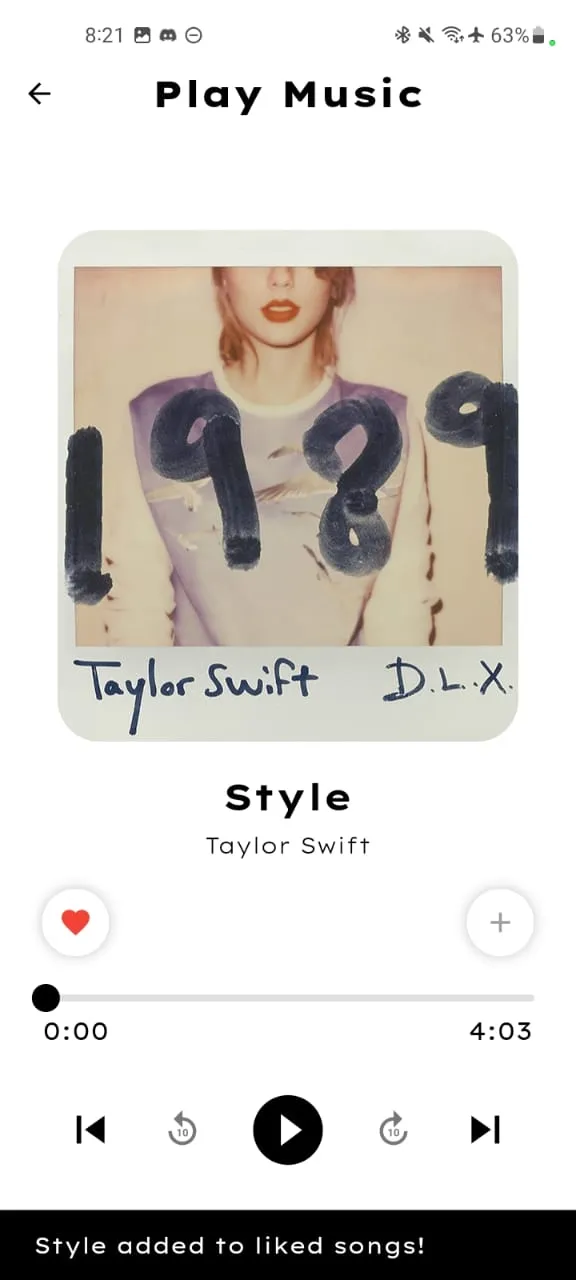

3. Music Integration

Users can play music directly within the app through integration with the Spotify API.

Tech Stack

The development followed the Waterfall methodology and utilized the following technologies:

- Frontend: Flutter (Dart) for cross-platform mobile development (Android/iOS).

- Backend: Firebase for authentication, database (Cloud Firestore), and Cloud Functions.

- Machine Learning: Python with Flask API. The model uses Neural Networks (Multi-layer Perceptron) for classification.

- Design: Figma for wireframing and prototyping.
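To illustrate the Multi-layer Perceptron named under Machine Learning, here is a minimal sketch of an MLP forward pass in plain Python. The layer sizes, the random untrained weights, and all function names are assumptions for illustration; the project's actual architecture and training setup are not described in this post.

```python
import math
import random

# Group names taken from the examples in the Age Detection section.
AGE_GROUPS = ["teenager", "adult", "elderly"]


def relu(v):
    return [max(0.0, x) for x in v]


def softmax(v):
    """Numerically stable softmax: subtract the max before exponentiating."""
    m = max(v)
    exps = [math.exp(x - m) for x in v]
    total = sum(exps)
    return [e / total for e in exps]


def dense(x, weights, bias):
    """Fully connected layer: one weight row and one bias per output unit."""
    return [sum(w * xi for w, xi in zip(row, x)) + b
            for row, b in zip(weights, bias)]


def init_layer(n_in, n_out, rng):
    """Random, untrained weights; a real model would load trained values."""
    weights = [[rng.uniform(-0.5, 0.5) for _ in range(n_in)]
               for _ in range(n_out)]
    return weights, [0.0] * n_out


def predict(features, layers):
    """ReLU hidden layers followed by a softmax output layer."""
    x = features
    for weights, bias in layers[:-1]:
        x = relu(dense(x, weights, bias))
    weights, bias = layers[-1]
    probs = softmax(dense(x, weights, bias))
    return AGE_GROUPS[probs.index(max(probs))], probs


rng = random.Random(0)
layers = [init_layer(16, 8, rng), init_layer(8, len(AGE_GROUPS), rng)]
group, probs = predict([0.1] * 16, layers)
```

With untrained weights the predicted group is arbitrary. The point is the shape of the computation: a fixed-length feature vector goes in, and one probability per age group comes out.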

Challenges & Solutions

During development, the team faced and overcame several technical hurdles:

- Low Model Accuracy: Initial tests showed low accuracy. This was resolved by re-evaluating the dataset, improving preprocessing, and fine-tuning hyperparameters.

- Unstable Spotify API: To handle API limits and failures, a retry mechanism and local caching were implemented.

- State Management: Implementing the BLoC pattern required considerable self-study. The team adopted it gradually, starting with simple features before moving on to complex ones.
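The retry mechanism and local caching mentioned for the Spotify API can be sketched as follows. This is an illustration in Python rather than the app's Dart code, and the class name `CachedFetcher`, the exponential backoff schedule, the cache lifetime, and the stale-data fallback are all assumptions, not details from the project.

```python
import time


class CachedFetcher:
    """Wrap a fetch function with retries, exponential backoff, and a TTL cache."""

    def __init__(self, fetch, retries=3, base_delay=0.5, ttl=300.0,
                 sleep=time.sleep, clock=time.monotonic):
        if retries < 1:
            raise ValueError("retries must be at least 1")
        self._fetch = fetch
        self._retries = retries
        self._base_delay = base_delay
        self._ttl = ttl
        self._sleep = sleep
        self._clock = clock
        self._cache = {}  # key -> (timestamp, value)

    def get(self, key):
        hit = self._cache.get(key)
        if hit is not None and self._clock() - hit[0] < self._ttl:
            return hit[1]  # fresh cache entry, no network call
        last_error = None
        for attempt in range(self._retries):
            try:
                value = self._fetch(key)
            except Exception as exc:  # a real client would catch narrower errors
                last_error = exc
                if attempt < self._retries - 1:
                    self._sleep(self._base_delay * 2 ** attempt)
            else:
                self._cache[key] = (self._clock(), value)
                return value
        if hit is not None:
            return hit[1]  # serve stale data rather than fail outright
        raise last_error


# Simulated API that fails twice before succeeding.
calls = {"n": 0}


def flaky_fetch(playlist_id):
    calls["n"] += 1
    if calls["n"] < 3:
        raise ConnectionError("simulated API failure")
    return {"id": playlist_id, "tracks": ["track-a", "track-b"]}


delays = []
fetcher = CachedFetcher(flaky_fetch, sleep=delays.append)
first = fetcher.get("teen-mix")   # two failures, then success
second = fetcher.get("teen-mix")  # answered from the cache
```

Injecting `sleep` and `clock` keeps the example testable without real waiting. When all retries fail, serving a stale cache entry keeps playback usable during an outage, at the cost of possibly outdated playlists.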

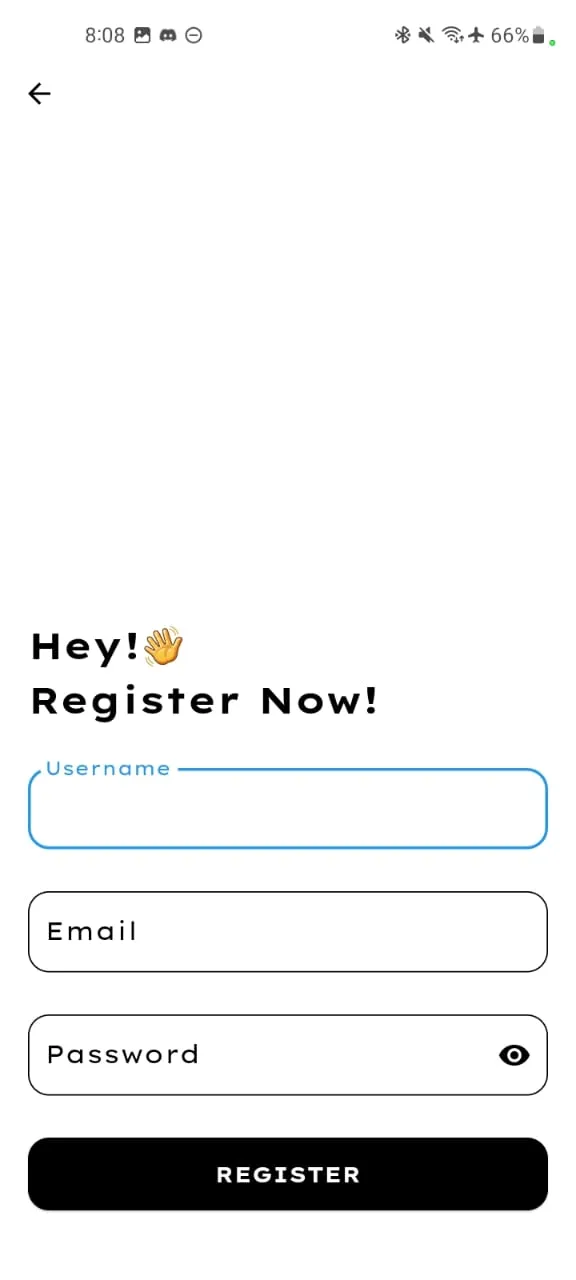

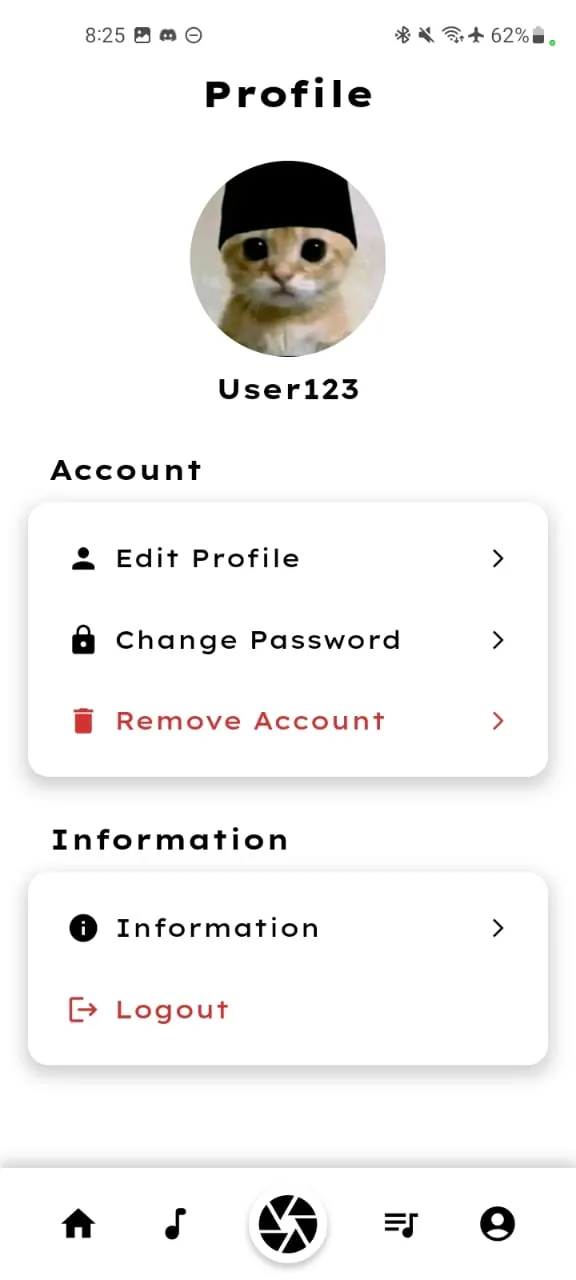

User Interface

The application features a clean, intuitive interface built with Flutter.

The Team

- Putra Zakaria Muzaki

- Amanda Vanika Putri